#129 - Why should you believe in the wisdom of the crowd? And when should you not?

Getting more of your team and your organization

Hello 👋

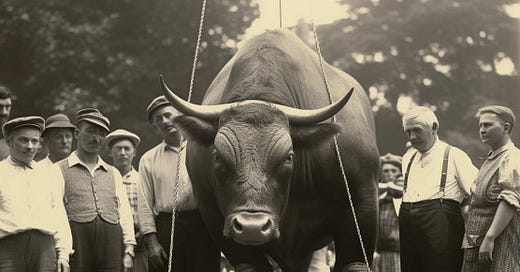

Let me take you to 1906. Plymouth, England. Country fair. Some livestock and poultry show, actually. A fat ox. A Joe-Biden-old statistician has an itchy idea that he wishes to scratch. But it’s not easy to pull off what he has in mind. So he comes up with a ruse.

📢📢A quick ask for help. Here’s a short survey that I would love you to take. It’s only a couple of minutes and I would be much obliged.

So: what our gray statistician finds after he’s pulled off the heist is the principle behind the success of Internet search engines such as Google and of sites powered by user-generated content (UGC) such as Reddit, Quora, or Stack Exchange.

He answers a simple question: Why and when should one seek different perspectives?

This newsletter has been tooting the horn for going out, talking to different people, and seeking diverse perspectives. Go farm for dissent; go socialize your idea. Implicit in the goading is the premise that more perspectives are better.

The advice is almost a cliché, but do you know why it holds? I mean, what exactly happens that elevates a group’s perspective above an individual’s?

I will not assume the answer is obvious. Go down the rabbit holes of social media and you’ll find more than enough collective madness.

Look through the literature and you’ll find a number of books that talk up the value of perspective-taking. I have found none that unpack the idea behind the wisdom of the crowd as well as Philip Tetlock and Dan Gardner do in Superforecasting. There’s also a further nuance in seeking the wisdom of the collective: not all collectives are the same. In fact, some crowds, no matter how big, are not worth consulting at all if they lack a crucial attribute.

The why and the when follow.

First, the why.

There’s no Google Trends data on the phrase, but we know that something curious happened in 1906 that thrust the idea of the wisdom of the crowd into the spotlight. Wikipedia (a meta ode to the subject of our discussion, since it exists today as the de facto wisdom-of-the-crowd repository) says:

> At a 1906 country fair in Plymouth, 800 people participated in a contest to estimate the weight of a slaughtered and dressed ox. Statistician Francis Galton observed that the median guess, 1207 pounds, was accurate within 1% of the true weight of 1198 pounds.
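
As a quick check on that ‘within 1%’ claim, here is the median’s relative error using the two figures Wikipedia gives:

$$
\frac{|1207 - 1198|}{1198} = \frac{9}{1198} \approx 0.0075 = 0.75\%
$$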

Wikipedia doesn’t do justice to Galton’s cleverly designed experiment masquerading as a weight-judging competition. He knew that at a livestock fair no one would give two hoots about a statistician’s hunch. But they might be interested in a competition that promised impressive prizes for the winner. He also didn’t want every country-fair merrymaker to take a guess. He wanted folks who knew a thing or two about livestock weight to self-select. So he levied a sixpenny participation fee. That weeded out the novices.

I read Galton’s account, published in Nature, in which he says he had to discard 13 of the 800 entries because they were illegible. So 787 people each guessed what the live ox would weigh once slaughtered and dressed, and their median guess landed within a hair’s breadth of the correct figure.

A freaky coincidence? Fluke? Here’s the underlying principle.

When individuals make an estimate, they do so on the basis of the narrow set of attributes they’re familiar with. ‘We canter toward forming a generalized overall impression based on a narrow dimension judged early,’ I wrote last week, and I think that explains how most of us arrive at our forecasts. The information on the narrow dimension we understand is genuinely useful, but extrapolated, it distorts the picture. Add up the narrow bits of information from many different sources, though, and you get an aggregated and far more accurate picture.

Next, the specific explanation of what happened that day at the country fair in 1906. Here I quote from Superforecasting:

> When a butcher looked at the ox, he contributed the information he possessed thanks to years of training and experience. When a man who regularly bought meat at the butcher’s store made his guess, he added a little more. A third person, who remembered how much the ox weighed at last year’s fair, did the same.

But, but, you object: didn’t the crowd also contribute their biases to the mix? Absolutely they did. But the individual biases, the exaggerations and the underestimations, pointed in different directions and canceled each other out. What survived in the estimate were the accurate bits. Some of the 787 guessers must have guessed too high and some too low. Aggregated, they landed right next to the correct number.
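
To see why opposing errors wash out, here is a minimal Python sketch. It is a toy model, not Galton’s data: it assumes each guess is the true weight (1,198 lb) plus that person’s own independent, zero-centred error with a hypothetical spread of 75 lb, and it borrows the 787 legible entries from Galton’s count. The function name `simulate_guesses`, the spread, and the random seed are illustrative assumptions.

```python
import random
import statistics

TRUE_WEIGHT_LB = 1198  # dressed weight reported for the ox
N_GUESSERS = 787       # legible entries Galton kept

def simulate_guesses(n, true_weight, spread, rng):
    """Hypothetical model: each guess = truth + that person's own error.

    Assumption: errors are independent and centred on zero, so roughly
    as many people overshoot as undershoot.
    """
    return [true_weight + rng.gauss(0, spread) for _ in range(n)]

rng = random.Random(1906)  # fixed seed so the run is repeatable
guesses = simulate_guesses(N_GUESSERS, TRUE_WEIGHT_LB, spread=75, rng=rng)

# The crowd's answer is the median guess, as in Galton's analysis.
crowd_estimate = statistics.median(guesses)
median_miss = abs(crowd_estimate - TRUE_WEIGHT_LB)

# For comparison: how far a typical individual guess lands from the truth.
typical_individual_miss = statistics.mean(abs(g - TRUE_WEIGHT_LB) for g in guesses)

print(f"crowd median: {crowd_estimate:.0f} lb (off by {median_miss:.1f} lb)")
print(f"typical individual miss: {typical_individual_miss:.1f} lb")
```

Under these assumptions the crowd’s median typically lands within a few pounds of the truth, while the average individual misses by tens of pounds. The cancellation relies entirely on the errors being independent and pointing in different directions.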

Now, the when.

When should we consult crowds for their wisdom?

How would our good statistician Francis Galton’s experiment have turned out had he run it at an annual symposium for vegetarians, vegans, and almond-milk drinkers?

I’ve a feeling the crowd would have let him down. Aggregating the views of people with no mental model for the weight of cattle would have meant aggregating junk. The wisdom of the crowd banks on the judgment of the individuals in it. Back to Superforecasting:

> Aggregating the judgments of many people who know nothing produces nothing. Aggregating the judgments of people who know a little is better, and if there are enough of them, it can produce impressive results, but aggregating the judgments of an equal number of people who know lots about lots of different things is most effective because the collective pool of information becomes much bigger.

‘People who know lots about lots of different things’: does that describe your team or group? If your team is a collection of more of the same, you may find collective wisdom elusive. There would be too much overlap in beliefs and expertise, making it closer to an echo chamber than an idea lab.
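
Extending the same toy model shows why the crowd’s composition matters. This sketch, again with hypothetical numbers rather than real data, compares three crowds of 787: an informed, diverse one whose errors are independent; a clueless one that guesses uniformly across an arbitrary 100 to 5,000 lb range; and an echo chamber in which everyone shares the same assumed +300 lb misconception. The ranges, the bias, the seed, and the `crowd_error` helper are illustrative assumptions.

```python
import random
import statistics

TRUE_WEIGHT_LB = 1198
N = 787
rng = random.Random(42)  # fixed seed so the run is repeatable

def crowd_error(guesses):
    """How far the crowd's median guess lands from the true weight."""
    return abs(statistics.median(guesses) - TRUE_WEIGHT_LB)

# 1. Informed, diverse crowd: independent errors pointing in different directions.
diverse = [TRUE_WEIGHT_LB + rng.gauss(0, 75) for _ in range(N)]

# 2. Crowd with no mental model of cattle: guesses scattered over a wide,
#    arbitrary range (hypothetical 100-5000 lb) that is not centred on the truth.
clueless = [rng.uniform(100, 5000) for _ in range(N)]

# 3. Echo chamber: everyone shares the same hypothetical +300 lb misconception,
#    plus a little individual noise. The shared part has nothing to cancel it.
SHARED_BIAS_LB = 300
echo_chamber = [TRUE_WEIGHT_LB + SHARED_BIAS_LB + rng.gauss(0, 40) for _ in range(N)]

for name, guesses in [("diverse", diverse),
                      ("clueless", clueless),
                      ("echo chamber", echo_chamber)]:
    print(f"{name:>12}: median off by {crowd_error(guesses):.0f} lb")
```

Only the first crowd’s median lands near the truth. The clueless crowd’s median drifts toward the middle of whatever range it guesses from, and the echo chamber’s shared error survives aggregation largely intact, because there is no opposing error to cancel it.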

Thus far I’ve tried to make the point that perhaps we should not bother seeking the counsel of any Tom, Dick, or Harry. Instead, we should make an effort to talk to people who have some degree of relevant expertise, and to talk to many of them. They may be members of your team or organization, or outsiders. Where they come from matters less as long as they have some familiarity with the problem you’re seeking wisdom on, because with enough of them, their misconceptions are likely to fall by the wayside. What is left is for you to synthesize, or triangulate, a single composite version of reality.

The Internet tells me that it was Jim Rohn, the motivational speaker, who first said that we’re the average of the five people we spend the most time with. I’m not sure he knew of Galton’s experiment, but it would be fun if it turned out he said so because he understood that we are influenced in no small measure by the wisdom of the crowd around us.

All hail vox populi!

📚Additional reading:

Until mid-week…